Improving the depth of insights from unmoderated research tools

Working with Sprig to create a possible AI solution


Unmoderated research often lacks the context and depth of moderated methods.

How can we improve this for user researchers?

👓 The Specs

Client: Sprig

Timeframe: 16 weeks

My role: UX Researcher

Team: Jess Lilly (UX Researcher), Matthew Finger (UX Designer), Arnav Das (UX Designer)

Supervisor: Auzita Irani, research manager for Sprig

Methods: Generative survey, semi-structured user interview, user persona, Wizard of Oz, usability test, heuristic evaluation

Tools: Qualtrics, Figma, Excel, Miro, Zoom, Animaze

🤝 Our Partnership with Sprig

Sprig is a B2B company that provides mid- to large-sized companies with user research tools. Its services include interview recruiting, in-product surveys, and concept and usability testing.

📋 The Plan

Develop the problem space

Methods: Stakeholder interview, competitor analysis

Identify user needs and design implications

Methods: User interviews, survey, affinity diagram, user persona

Ideate and receive feedback

Methods: Wireframe, prototype

Evaluate final design

Methods: Wizard of Oz user evaluation, expert heuristic evaluation

❗️ The Problem Space

Who is Sprig? What do they want to achieve? What niche do they occupy? Who are their competitors? Who is their user base?

We set up a remote, semi-structured interview with Auzita Irani, Research Manager at Sprig.

Sprig faces the following problems:

Surveys are rigid in design.

Rich, contextual data is often lost due to the nature of unmoderated methods.

👩‍💻 Competitor Analysis

We looked at other research tools most frequently used by researchers in the industry.

We found that surveys, mini surveys, and live interviews were popular features on platforms such as Qualtrics, UserTesting, Survicate, and Lookback.

👥 The Stakeholders

Defining the users was a little more complicated than it first seemed. On one side were Sprig's users, the mid- to large-sized companies that buy its research tools (which we dubbed Level 1, or L1, users). On the other were those companies' research participants (which we dubbed Level 2, or L2, users).

It was important for us to take both into consideration; research tools are two-sided.

🗣 The User Research Process

For the research phase, we planned three stages to explore user needs and pain points.

Generative Survey

Our first survey aimed to understand the general pain points of Sprig's Level 1 users as a starting point for deeper investigation.

Recruiting participants was easy because we had access to a Slack server of experienced researchers.

Our findings:

👍 Users like remote user research tools because they are convenient.

📈 There is a learning curve associated with online research tools.

🕵 Moderated research allows users to delve deep into contextual details.

Semi-Structured User Interview

Using our UX research contacts, we recruited professionals to answer our questions about how they use research tools in their jobs.

Our findings:

😣 Users are frustrated by irrelevant survey answers, whether caused by misinterpreted questions or other factors.

👫 Moderated research allows for rapport building, which is key to fully understanding a user.

Sample Questions:

Tell me about a recent project in which you used online research tools.

Can you tell me about your thought process when choosing between moderated and unmoderated research methods?

Sample Questions:

Approximately how many years of experience do you have working with user research tools?

What challenges and limitations have you faced using online research tools?

📝 Notes:

The majority of our participants were researchers as opposed to designers or product managers.

Designers and product managers may have different needs!

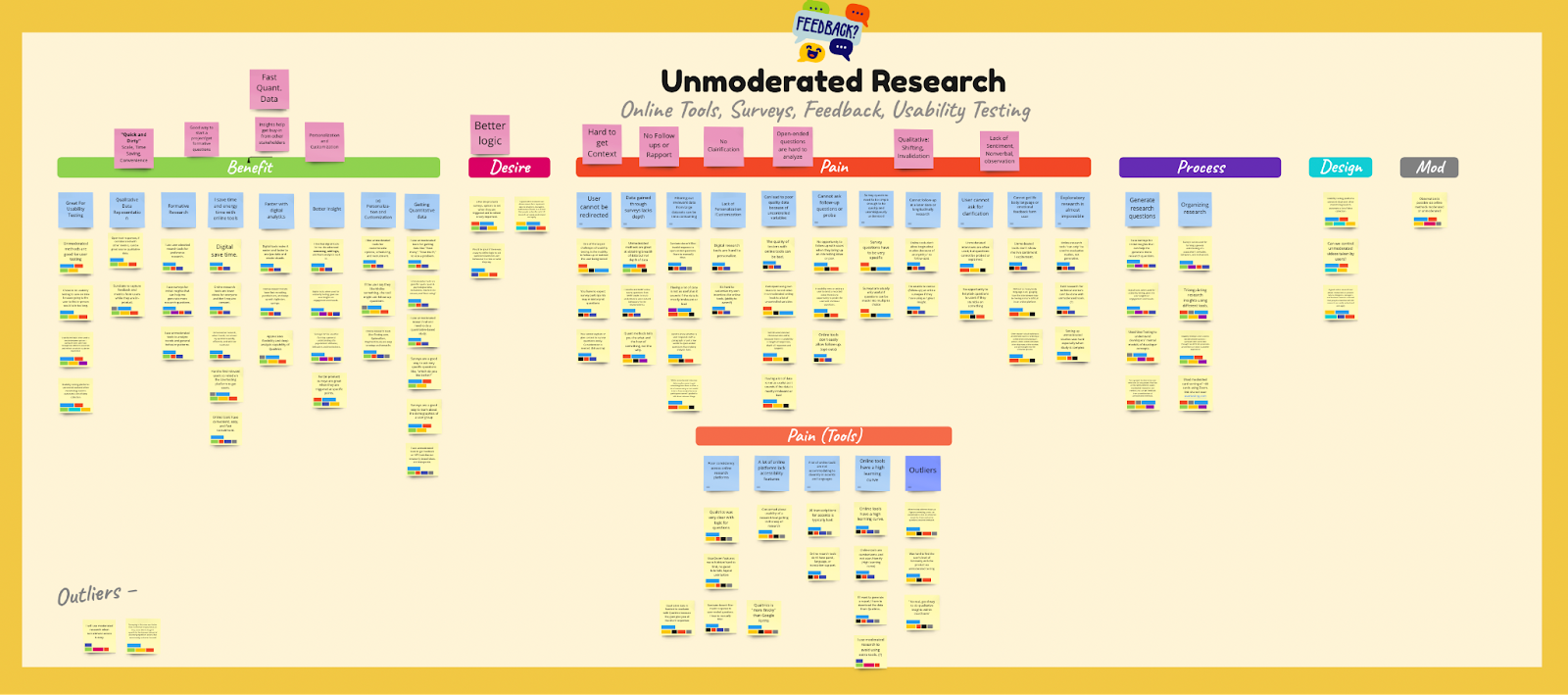

📊 Analysis

We compiled our notes in an Excel spreadsheet and transferred them to a Miro board, where we tagged each insight.

At last, we could see the bigger picture and consider the user needs and design implications from our findings:

The ability to reach a diverse audience

An easy-to-use interface with customizable questions

Follow-up questions to dive deeper into insights

A compelling experience that keeps participants interested

A way to obtain contextual details

To help us characterize our user base further, we created user personas.

👩‍🦰 User Personas

Danny, 40 y/o Male

Background

Danny is the lead user researcher at Flipper, a small used-clothing startup in Chicago.

Goals

Get buy-in from the entire team to invest more resources in research.

Set up a smooth and efficient system to conduct research within the organization.

Motivations

Wants to get product managers actively involved in research.

Wants an easily accessible pool of users to conduct research with.

Frustrations

The price/value ratio of research tools.

Often has to overstretch himself because his UX team is small.

Travelling for work hampers his research.

Prisha, 29 y/o Female

Background

Prisha is a senior UX designer working at a large tech company in London.

Goals

Have all research insights in one place.

Collaborate with her team to derive the best insights possible.

Motivations

Wants to drive more efficiency in research methods within her team.

Wants to be able to conduct quick preliminary research on her own or through her team when needed.

Frustrations

Time wasted setting up research tools instead of using them.

Convincing her team of designers of the value of research.

Shortage of specialized researchers for her product vertical.

✍🏻 Ideation and Feedback

So, we knew what the users wanted and what they struggled with. It was time to start ideating a possible solution.

💡 Idea 1

V-Search Video Research

Modeled after TikTok, where people freely share complaints and boasts, V-Search uses customizable prompts to engage users with research questions.

💡 Idea 2

Virtual Assistant

A friendly AI, programmable with questions and follow-up prompts, takes the place of the interviewer.

V-Search Video Research

Pros:

✔️ Entertaining, novel, trendy, engaging

✔️ Convenient for both L1 and L2 users

✔️ Offers some amount of contextual detail due to video

Cons:

❌ Personal privacy concerns

❌ Users may not be willing to share info about all types of products

❌ May not be understood by all generations

❌ May become obsolete fast as it relies on trends

❌ One-way communication; no way to dive deeper

Virtual Assistant

Pros:

✔️ Novelty of AI makes for an engaging experience

✔️ Breaks down barriers of talking to a human stranger

✔️ Allows for a deeper dive into insights via follow-up prompts

✔️ Easy to add to an existing site

Cons:

❌ Personal privacy concerns

❌ Follow-up capability is limited by current technology and resource-heavy

❌ May not be convincing

❌ Cultural, language, and background considerations

🎨 Wireframe

✏️ Prototype and Testing

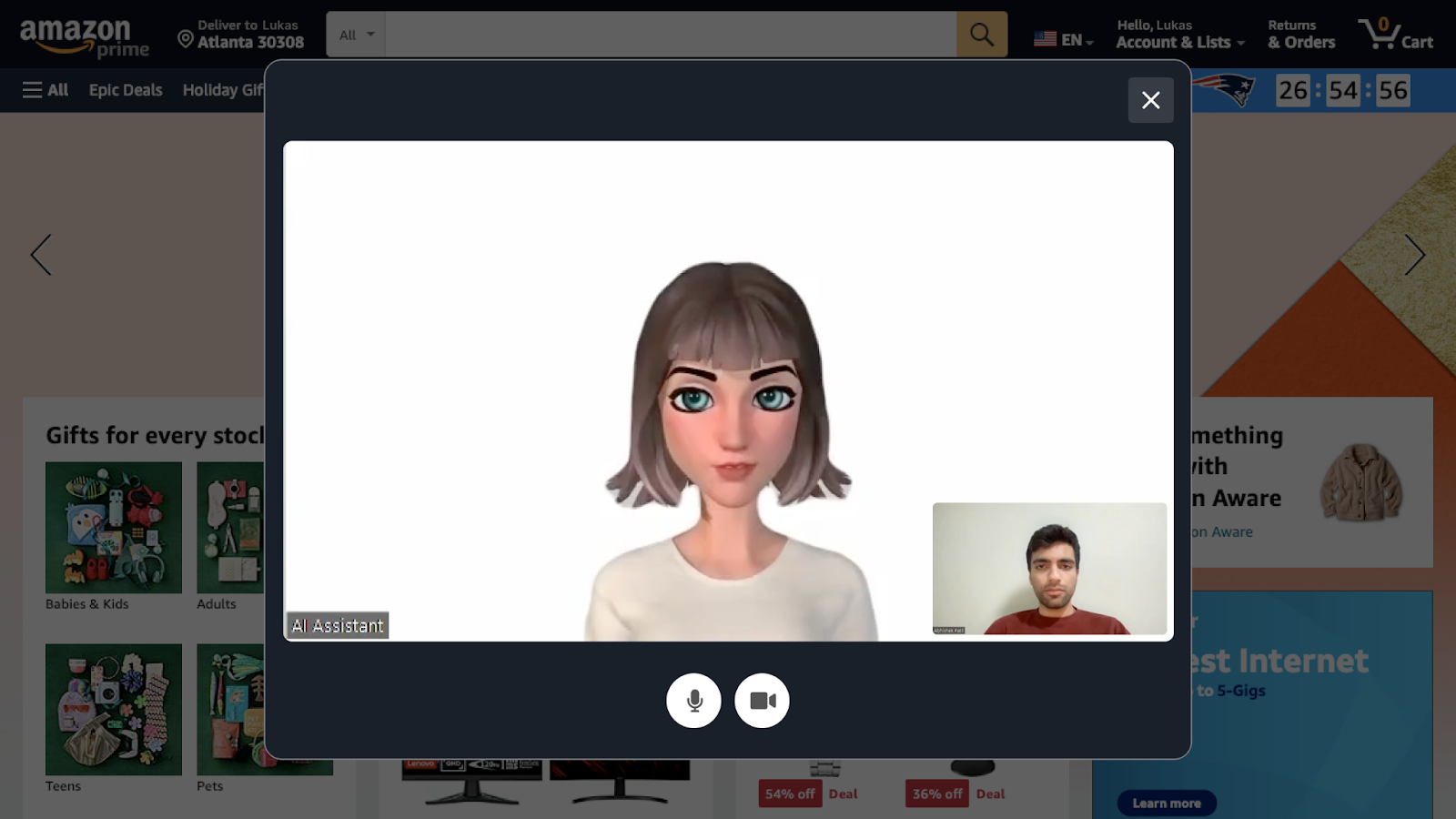

How could we test a prototype for a concept that couldn’t be fully realized due to technological limitations?

We came up with a fast, cheap, and efficient solution:

🧙 Implement the Wizard of Oz Method.

The plan was:

Write a script including potential follow-ups to represent pre-programmed questions.

Use a face and voice filter to simulate the Virtual Assistant.

Stick to the script and gather feedback on a website.
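To make the idea of "pre-programmed questions with potential follow-ups" more concrete, here is a minimal Python sketch of how a Virtual Assistant might pick its next prompt from a script. This is a hypothetical illustration, not something we built: the `ScriptedQuestion` name, the keyword-matching rule, and the sample prompts are all assumptions. In our Wizard of Oz test, a human (me) made these choices live.

```python
from dataclasses import dataclass, field


@dataclass
class ScriptedQuestion:
    """One pre-programmed question plus its possible follow-ups (hypothetical structure)."""

    prompt: str
    # Assumption: a follow-up is triggered when its keyword appears in the answer.
    follow_ups: dict[str, str] = field(default_factory=dict)
    # Generic probe used when no keyword matches.
    fallback: str = "Can you tell me more about that?"

    def next_prompt(self, answer: str) -> str:
        """Return the first follow-up whose keyword appears in the answer, else the fallback."""
        lowered = answer.lower()
        for keyword, follow_up in self.follow_ups.items():
            if keyword in lowered:
                return follow_up
        return self.fallback


# Hypothetical sample question, framed like a product-review scenario.
question = ScriptedQuestion(
    prompt="How has the product you bought worked out for you?",
    follow_ups={
        "broke": "When did it break, and what were you doing at the time?",
        "love": "What do you love most about it?",
    },
)

print(question.prompt)
print(question.next_prompt("Honestly, it broke after a week."))
print(question.next_prompt("It's fine, I guess."))
```

The keyword matching is deliberately naive. That naivety is the "follow-up capability is limited by technology" con in practice, and it is why simulating the assistant with a human was the faster way to test the concept.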

At first we worried it would not be convincing or realistic, but we decided to go ahead with it.

We also struggled to find a realistic voice modulator, so we went without one and relied on my best voice acting instead.

Demonstrating the idea.

Here I test out Animaze and work on designing an avatar for a usability test.

We ran a usability test framed as an Amazon review, since Amazon is a website most people are familiar with. One team member acted as moderator and note taker, while I conducted the mock interview as the Virtual Assistant using the Animaze filter.

Afterwards, the moderator interviewed the participants about their experience with the Virtual Assistant.

🤖 3 out of 5 participants believed they were talking to an AI.

📝 Evaluation

We needed expert feedback on our prototype, so we recruited a researcher with industry experience who frequently uses unmoderated research tools.

We had them do a heuristic evaluation of our prototype and also received feedback on the concept through an unstructured interview.

Finally, we compiled our results into an affinity board.

📄 Summary of Findings

❌ Users were unsure whether their answers were fully captured.

✔️ The interview was entertaining and engaging.

❌ There were visual bugs.

❌ There were privacy concerns.

✔️ L2 users would be more motivated to use the AI Assistant than a traditional interview.

💭 Final Thoughts

The problem statement was not an easy one to solve!

Though we came up with novel ideas, they are difficult to implement given current technological limitations.

Even if the AI Assistant were to work perfectly as intended, we would still face the problem of user privacy.

However…

✔️ The AI Assistant fulfilled some objectives of our original problem statement.

✔️ It broke down barriers between interviewer and interviewee.

✔️ It’s convenient to implement, much like a survey.

✔️ It allows for a more in-depth line of questioning without an interviewer present.

✔️ It adds some contextual depth, since video captures emotion and expression.

What I Would Have Done Differently

🔭 Make objectives for each step clearer and generate more helpful visualizations.

🌎 Collect more diverse feedback on the prototype to target a larger demographic.

🧐 Receive more expert evaluation from different perspectives.

🧭 Next Steps

Improve the prototype based on user and expert feedback.

Give participants more control and more context for the interview.

Work out visual bugs.

Continue expert and user evaluation.

Test with more research scenarios.

Improve the breadth and depth of the script, allowing for more customizability.